Artificial intelligence (AI) is the future of technology and humanity. With deep learning (DL) and machine learning (ML) by its side, it redefines how we code, work, think, perform, and even how we live.

‘Software is eating the world, but AI is going to eat software’ – Jensen Huang, CEO of NVIDIA

It was hard to take these words seriously just two decades back, but if we look around at our world now and how its future is shaping up – yes, AI is the next big thing. But before we get there and ask why and how, let’s go back to how it all started.

Evolution of artificial intelligence (AI)

The 1950s were, in a way, the birth decade of artificial intelligence (AI). First came Isaac Asimov’s science fiction and the ‘Turing test’ that Alan Turing proposed in his paper. From there on, scientists, mathematicians, and philosophers started warming up to the very concept of AI. They found it plausible that a machine well trained on data could solve problems and make decisions, just as humans do. Then came ‘The Logic Theorist’, a program from Allen Newell, Cliff Shaw, and Herbert Simon, developed with the RAND (Research and Development) Corporation, which could mimic the problem-solving abilities of humans. The Dartmouth Summer Research Project on Artificial Intelligence (DSRPAI) became pivotal in this direction. AI kept taking such baby steps from 1957 to 1974 as machine learning algorithms evolved, and efforts like Newell and Simon’s General Problem Solver and Joseph Weizenbaum’s ELIZA advanced problem-solving and the interpretation of natural language. AI also saw its rough phases: the first AI winter from 1974 to 1980 and the second from 1987 to 1993.

As both algorithms and funding for AI exploded, more and more expert systems started emerging. The 1990s and the decades that followed saw many milestones for AI. From IBM’s Deep Blue defeating the reigning world chess champion Garry Kasparov to Google’s AlphaGo beating the Chinese Go champion Ke Jie, AI dug its heels in even deeper. We saw a Stanford autonomous vehicle crack the DARPA challenge by driving itself for 130 miles, and a Google Brain computer cluster that trained itself to recognize cats from millions of YouTube images. Today, we have intelligent personal assistants, driverless cars, and much more, all signifying that AI is more than Isaac Asimov’s science fiction.

Different forms and methods of artificial intelligence (AI)

But AI is not vanilla. It has many stripes. First, there is Narrow AI, in which AI performs specific tasks as well as or better than humans can. Second, there is General AI, where machines become human-like, make their own decisions, and learn with no human input. This is where AI transcends logic and enters emotions. And finally, there is the realm of superintelligence, where AI is way ahead of humans in everything from creativity to social skills.

AI vs. machine learning (ML) vs. deep learning (DL)

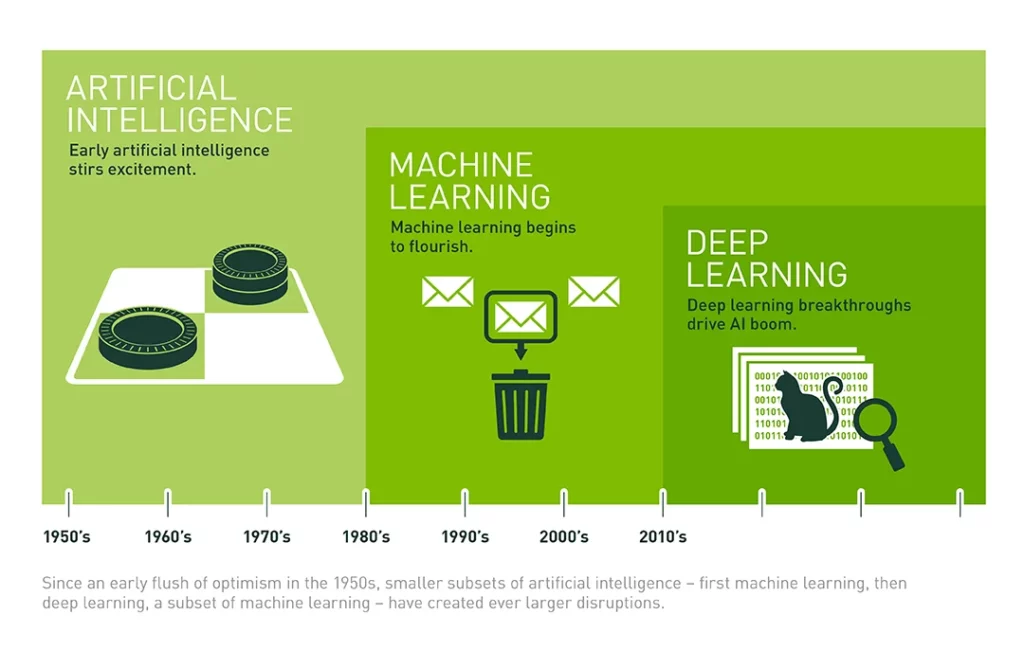

Yes, at times, they are used interchangeably, but they are different. AI is about building intelligent programs and machines to solve problems. Its subset is machine learning (ML) that helps systems to automatically learn and improve from experience without explicit programming. Here, the hand-coding is skipped because the machine is “trained” with large amounts of data and algorithms.

Source: Nvidia

Deep learning (DL) is a subfield of machine learning. It is inspired by the structure of the human brain and imitates the way humans gain certain types of knowledge. It uses neural networks, whose layered structure loosely resembles the human nervous system, to analyze various factors.
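To make the idea of “training” instead of hand-coding a little more concrete, here is a minimal sketch in plain Python. It is only an illustration, not how production systems are built: it trains a single artificial neuron (a perceptron), the kind of unit that deep learning stacks into many layers. The task (learning logical AND), the function names `predict` and `train`, and the learning rate are all assumptions chosen for the example.

```python
# Labeled examples: inputs and the answer we want (here, logical AND).
# The rule itself is never written into the program; it is learned.
EXAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]


def predict(weights, bias, inputs):
    """Return 1 if the weighted sum of the inputs is positive, else 0."""
    total = bias + sum(w * x for w, x in zip(weights, inputs))
    return 1 if total > 0 else 0


def train(examples, epochs=25, learning_rate=0.1):
    """Perceptron learning rule: nudge the weights after every mistake."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in examples:
            # error is +1, 0, or -1 depending on how the guess was wrong
            error = target - predict(weights, bias, inputs)
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


weights, bias = train(EXAMPLES)
for inputs, target in EXAMPLES:
    print(inputs, "->", predict(weights, bias, inputs), "| expected:", target)
```

After training, the neuron's predictions should match the expected answers for all four examples, even though no line of the program spells out the AND rule. Real deep learning models follow the same learn-from-mistakes idea, but with many layers of such units and far more data.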

Benefits of artificial intelligence (AI) for society and its future

A quick look at the numbers shows just how fast and how broadly AI is growing and entering our work and personal lives. International Data Corporation (IDC) predicts that by 2024, worldwide revenues for the artificial intelligence (AI) market, including software, hardware, and services, will break the $500 billion mark, with a five-year compound annual growth rate (CAGR) of 17.5% and total revenues reaching an impressive $554.3 billion. The use cases of AI are going to be visible in all aspects of business and life:

- Historic rain trends

- Clinical imaging

- Cognitive applications for tagging, clustering, categorization, hypothesis generation, alerting, filtering, navigation, and visualization

- Healthcare areas like robot-assisted surgery, dosage error reduction, virtual nursing assistants, clinical trial participant identifier, hospital workflow management, preliminary diagnosis, and automated image diagnosis

- BFSI (banking, financial services, and insurance) areas like financial analysis, risk assessment, and investment/portfolio management solicitations

- Crime control

- Driverless transport

- Augmentation of human capabilities in multiple areas

What is also remarkable is the massive interest and investment that tech giants like Amazon, Google, Apple, Facebook, IBM, and Microsoft are showing in the research and development of AI, especially in making AI more accessible for enterprise use cases.

From Artificial Intelligence to Superintelligence

But as the McKinsey report ‘The State of AI in 2020’ shows, AI high performers most often say their models have mis-performed within the business functions where AI is used most. The share is highest for marketing and sales (32 percent), followed by product and/or service development (21 percent) and service operations (19 percent). This hints at a significant need for expertise that can handle and leverage AI productively and positively.

For technology professionals, there is a vast field of opportunities opening up here. Whether it is data, software, or hardware, the possibilities are endless. We will see a significant uptick in AI frameworks, algorithms, and research built on next-generation computing architectures and access to rich datasets. We will see applications for primitives, linear algebra, inference, sparse matrices, video analytics, and multiple hardware communication capabilities. Even in the hardware space, there is the rise of chipsets such as graphics processing units (GPUs), CPUs, application-specific integrated circuits (ASICs), and field-programmable gate arrays (FPGAs).

AI will change the software and hardware we use – and that means it’s time to build your AI, machine learning, and deep learning muscles and make the most of this new era.
